Unlocking the Future: A Beginner’s Guide to AI Fundamentals & Concepts

In an increasingly digitized world, Artificial Intelligence (AI) has moved from the realm of science fiction into our everyday lives. From personalized recommendations on streaming services to virtual assistants on our phones, AI is subtly, yet profoundly, reshaping how we live, work, and interact. But what exactly is AI? And how does it work?

If you’ve ever felt a little lost amidst terms like "Machine Learning," "Deep Learning," or "Neural Networks," you’re in the right place. This comprehensive guide will demystify the core concepts of AI, breaking down the fundamentals into easy-to-understand language. Whether you’re a curious beginner, a student, or a professional looking to grasp the basics, prepare to unlock the fascinating world of Artificial Intelligence.

What Exactly is Artificial Intelligence (AI)?

At its core, Artificial Intelligence (AI) refers to the simulation of human intelligence processes by machines, especially computer systems. These processes include learning, reasoning, problem-solving, perception, and understanding language.

Think of it this way: For centuries, humans have tried to build machines that could mimic our physical capabilities. Now, with AI, we’re building machines that can mimic our mental capabilities. It’s about teaching computers to "think" in ways that resemble human thought, or at least achieve similar outcomes.

Key characteristics of AI often include:

- Learning: Acquiring information and rules for using the information.

- Reasoning: Using rules to reach approximate or definite conclusions.

- Problem-solving: Finding solutions to complex problems.

- Perception: Using sensory input (like images or sounds) to understand the world.

- Language understanding: Comprehending and generating human language.

Why is it called "Artificial"? Because it’s not biological or natural intelligence. It’s intelligence created by humans, typically through algorithms and vast amounts of data.

A Brief History of AI: From Dreams to Reality

While AI feels like a modern phenomenon, its roots stretch back decades. The concept of intelligent machines has been explored in philosophy and fiction for centuries.

- 1950s: Alan Turing laid theoretical groundwork in 1950 with his "Turing Test." The term "Artificial Intelligence" was coined by John McCarthy for the 1956 Dartmouth College workshop, widely regarded as the founding event of the field. Early AI programs focused on problem-solving and symbolic reasoning.

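- 1960s-70s: Optimism soared as early programs tackled puzzles, games, and simple language tasks. However, limitations in computing power and data availability led to funding cuts and the first "AI Winter" in the mid-1970s.

- 1980s-90s: "Expert systems" that mimicked human experts in specific domains drove a commercial revival in the 1980s, followed by a second slowdown late in the decade. Interest returned with advances in machine learning algorithms and, in the 1990s, the rise of the internet, which provided far more data.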

- 2000s-Present: The "Big Data" revolution, coupled with significant increases in computational power (especially GPUs), has propelled AI into its current golden age. Machine learning, particularly deep learning, became the dominant approach, leading to breakthroughs in areas like image recognition, natural language processing, and game playing.

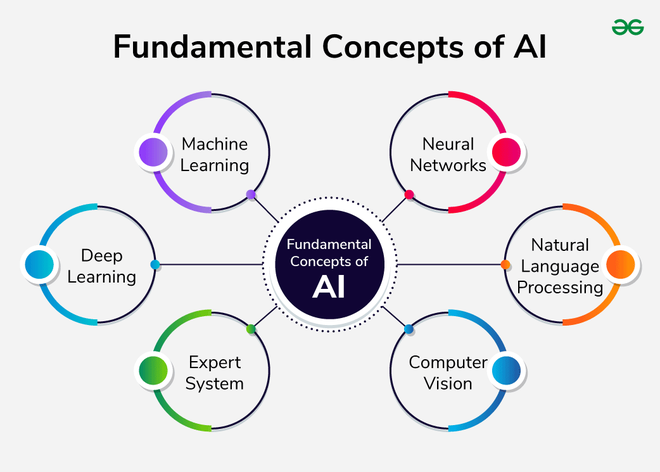

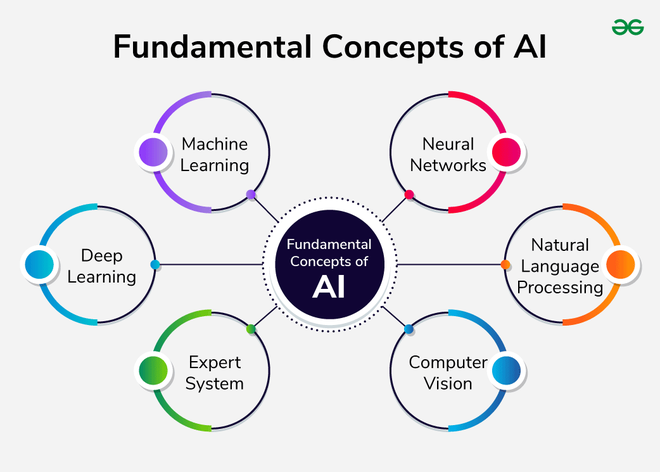

The Pillars of Modern AI: Machine Learning, Deep Learning, NLP & Computer Vision

While AI is the broad umbrella, several specific fields and technologies drive its progress. The most prominent among them are Machine Learning, Deep Learning, Natural Language Processing, and Computer Vision.

1. Machine Learning (ML): Learning from Data

Machine Learning (ML) is a subset of AI that enables systems to learn from data without being explicitly programmed. Instead of writing specific instructions for every possible scenario, you feed an ML model a vast amount of data, and it learns patterns and makes predictions or decisions based on those patterns.

Think of it like this: Instead of telling a child exactly how to identify a cat (e.g., "it has pointy ears, whiskers, a tail, and meows"), you show them hundreds of pictures of cats and other animals, pointing out which ones are cats. Eventually, the child learns to identify a cat on their own. That’s essentially what Machine Learning does.

How does ML work?

- Data: It starts with a large dataset (e.g., images, text, numbers).

- Algorithms: ML algorithms are the "rules" or mathematical procedures that the computer uses to find patterns in the data.

- Model: The output of the learning process is a "model" – a trained system that can now make predictions or decisions on new, unseen data.
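To make the data, algorithm, and model steps concrete, here is a minimal Python sketch. The house sizes and prices are made-up numbers, and the "algorithm" is ordinary least squares for a straight line, one of the simplest learning procedures; real ML libraries provide far more sophisticated ones.

```python
# Data (made-up for illustration): house size in square metres -> price in thousands
sizes = [50.0, 70.0, 90.0, 110.0]
prices = [150.0, 200.0, 250.0, 300.0]

def train_line(xs, ys):
    """Algorithm: ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    # Model: the learned parameters wrapped in a function that makes predictions
    return lambda x: slope * x + intercept

model = train_line(sizes, prices)
print(model(80.0))  # prediction for a size the model never saw: 225.0
```

The program never contains a rule like "add 2.5 per square metre"; that relationship is extracted from the examples, which is the core idea of machine learning.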

Types of Machine Learning:

Machine Learning is broadly categorized into three main types based on how they learn:

a) Supervised Learning:

- Concept: The model learns from "labeled" data, meaning each piece of data comes with the correct answer or outcome.

- Analogy: Learning with a teacher. The teacher gives you questions and tells you the correct answers.

- Tasks:

  - Classification: Predicting a category (e.g., spam or not spam, cat or dog, disease present or not).

  - Regression: Predicting a continuous value (e.g., house prices, temperature, stock prices).

- Examples: Email spam detection, image classification, predicting customer churn, predicting sales figures.
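A small Python sketch of supervised classification follows. It uses k-nearest neighbours, one of the simplest classifiers, on hypothetical labeled data (the two features, ear pointiness and body weight, are invented for illustration). A new animal is labeled by a majority vote among the k most similar training examples.

```python
import math
from collections import Counter

# Hypothetical labeled training data: (ear_pointiness, body_weight_kg) -> label
training_data = [
    ((0.9, 4.0), "cat"),
    ((0.8, 3.5), "cat"),
    ((0.85, 5.0), "cat"),
    ((0.3, 20.0), "dog"),
    ((0.4, 25.0), "dog"),
    ((0.2, 30.0), "dog"),
]

def knn_predict(point, data, k=3):
    """Classify `point` by majority vote of its k nearest labeled neighbours."""
    # Sort the labeled examples by Euclidean distance to the query point
    by_distance = sorted(data, key=lambda item: math.dist(point, item[0]))
    # Count the labels of the k closest examples and return the most common one
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

print(knn_predict((0.75, 4.5), training_data))   # cat
print(knn_predict((0.35, 22.0), training_data))  # dog
```

Because the features are not rescaled here, body weight dominates the distance; in practice features are usually normalized first so that each one contributes fairly.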

b) Unsupervised Learning:

- Concept: The model learns from "unlabeled" data, finding hidden patterns or structures without any predefined answers.

- Analogy: Learning without a teacher. You’re given a pile of mixed objects and told to sort them into groups that make sense to you.

- Tasks:

  - Clustering: Grouping similar data points together (e.g., customer segmentation, grouping genes with similar activity patterns).

  - Association: Discovering rules that describe relationships between variables (e.g., "people who buy bread often buy milk").

  - Dimensionality Reduction: Simplifying complex data while retaining important information.

- Examples: Customer segmentation for marketing, anomaly detection (e.g., fraud), organizing large datasets.
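Here is a minimal Python sketch of clustering with k-means, one widely used unsupervised algorithm. The customer data (visits per month, average spend) is hypothetical, and no labels are given: the algorithm alternates between assigning each point to its nearest centre and moving each centre to the average of its points until nothing changes. The initialisation (first k points) is a simplification for readability.

```python
import math

def kmeans(points, k, max_iters=100):
    """Group points into k clusters; returns (cluster label per point, centroids)."""
    # Naive initialisation: use the first k points as the starting centroids
    centroids = [points[i] for i in range(k)]
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest centroid
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:  # assignments stopped changing: converged
            break
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return labels, centroids

# Hypothetical customers: (visits per month, average spend in tens of dollars)
customers = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
labels, centroids = kmeans(customers, k=2)
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The algorithm discovers the "occasional" and "frequent" customer groups on its own; naming what each group means is still up to a human.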

c) Reinforcement Learning (RL):

- Concept: An "agent" learns to make decisions by performing actions in an "environment" and receiving "rewards" or "penalties" based on the success or failure of those actions. It’s like learning through trial and error.

- Analogy: Training a pet. You reward good behavior (a treat) and discourage bad behavior (no treat or a mild "no"). The pet learns which actions lead to rewards.

- Tasks: Decision-making in dynamic environments.

- Examples: Training robots to perform tasks, self-driving cars learning to navigate, game-playing AI (like AlphaGo defeating human champions), optimizing complex systems.
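The trial-and-error loop can be sketched with tabular Q-learning, one classic RL algorithm (systems like AlphaGo use far more elaborate methods). The environment is a hypothetical five-cell corridor: the agent starts at cell 0, can step left or right, and earns a reward of 1 only on reaching cell 4. The learning rate, discount factor, and exploration rate are illustrative choices.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (toy environment)
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right

def step(state, action):
    """Environment: apply an action, return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)  # walls at both ends
    if next_state == N_STATES - 1:
        return next_state, 1.0, True    # reward for reaching the goal
    return next_state, 0.0, False       # no reward for ordinary moves

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                     # cap the length of each episode
            # Epsilon-greedy: explore at random sometimes (or on ties), else exploit
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q

q_table = train_q_learning()
policy = ["right" if row[1] > row[0] else "left" for row in q_table[:-1]]
print(policy)  # ['right', 'right', 'right', 'right']
```

Nobody tells the agent that "right" is correct; it learns this only because moves toward the goal eventually lead to the reward.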

2. Deep Learning (DL): The Power of Neural Networks

Deep Learning (DL) is a specialized subset of Machine Learning that uses artificial neural networks with multiple layers ("deep" networks) to learn complex patterns from vast amounts of data. It’s behind many of the most impressive AI breakthroughs of recent years.

Think of it like this: Imagine a network inspired by the human brain’s structure. Our brains have billions of neurons connected in complex ways. Deep Learning loosely imitates this structure with "artificial neurons" (nodes), far simpler than biological ones, arranged in layers. Each layer learns to recognize different features or patterns, passing its output to the next layer for further processing.

Key characteristics of Deep Learning:

- Artificial Neural Networks (ANNs): These are the core building blocks, consisting of interconnected nodes (neurons) organized in layers: an input layer, one or more "hidden" layers, and an output layer.

- "Deep" Networks: The term "deep" refers to the number of hidden layers in the neural network. More layers allow the network to learn more abstract and complex representations of the data.

- Feature Learning: Unlike traditional ML where features often need to be manually extracted from data, deep learning models can automatically learn and extract relevant features.

- Data Hunger: Deep learning models typically require massive amounts of data to perform well.

- Computational Power: They also require significant computational resources, often leveraging specialized hardware like GPUs (Graphics Processing Units).
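To show what "layers of artificial neurons" means in practice, here is a minimal Python sketch of a forward pass through a tiny network that computes XOR, a classic function no single-layer network can represent. The weights are hand-picked for illustration, and the activation is a simple threshold; real deep learning models learn millions of weights during training and typically use smooth activations such as ReLU or sigmoid.

```python
def step(z):
    # Threshold activation: the neuron "fires" (1.0) if its input is positive
    return 1.0 if z > 0 else 0.0

def dense_layer(inputs, weights, biases):
    """Fully connected layer: each neuron applies the activation to a weighted sum plus bias."""
    return [step(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
            for neuron_weights, b in zip(weights, biases)]

# Hand-picked (not learned) weights for a 2-input, 2-hidden, 1-output network
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]  # hidden neuron 1 behaves like OR, neuron 2 like AND
HIDDEN_B = [-0.5, -1.5]
OUTPUT_W = [[1.0, -1.0]]             # output fires for "OR but not AND", i.e. XOR
OUTPUT_B = [-0.5]

def network(x1, x2):
    hidden = dense_layer([x1, x2], HIDDEN_W, HIDDEN_B)  # input layer -> hidden layer
    return dense_layer(hidden, OUTPUT_W, OUTPUT_B)[0]   # hidden layer -> output layer

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", network(a, b))
```

The outputs are 0.0, 1.0, 1.0, 0.0. The hidden layer builds intermediate features (OR and AND) that the output layer combines, a small-scale version of how deeper networks build increasingly abstract representations.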

Deep Learning is particularly powerful for:

- Image and Video Recognition: Identifying objects, faces, scenes, and activities in visual data.

- Speech Recognition: Transcribing spoken language into text.

- Natural Language Processing (NLP): Understanding and generating human language at a sophisticated level.

- Generative AI: Creating new content like images, text, and music (e.g., DALL-E, ChatGPT).

3. Natural Language Processing (NLP): Understanding Human Language

Natural Language Processing (NLP) is a branch of AI that focuses on enabling computers to understand, interpret, and generate human language in a way that is both meaningful and useful. It bridges the gap between how humans communicate and how computers process information.

Why is human language so hard for computers? Because it’s messy! Words can have multiple meanings (homonyms), context matters, sarcasm is hard to detect, and grammar can be complex. NLP aims to teach computers these nuances.

Common NLP Tasks and Applications:

- Sentiment Analysis: Determining the emotional tone or opinion expressed in text (e.g., positive, negative, neutral reviews).

- Machine Translation: Translating text or speech from one language to another (e.g., Google Translate).

- Chatbots and Virtual Assistants: Powering conversational AI systems that can interact with users (e.g., Siri, Alexa, customer service chatbots).

- Text Summarization: Condensing long documents into shorter, coherent summaries.

- Speech Recognition: Converting spoken words into written text (a key component of voice assistants).

- Spam Detection: Identifying unwanted emails based on their content.
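As one concrete example of an NLP task, here is a minimal Python sketch of spam detection using multinomial Naive Bayes, a classic statistical text classifier (modern systems often use neural models instead). The four training emails are invented, and the tokenizer simply lowercases and splits on spaces; real NLP pipelines handle punctuation, word forms, and much more.

```python
import math
from collections import Counter

def tokenize(text):
    # Crude tokenizer: lowercase the text and split on whitespace
    return text.lower().split()

def train_naive_bayes(examples):
    """examples: list of (text, label). Returns per-label word counts, label counts, vocabulary."""
    word_counts = {}          # label -> Counter of word occurrences
    label_counts = Counter()  # label -> number of training documents
    vocab = set()
    for text, label in examples:
        words = tokenize(text)
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return word_counts, label_counts, vocab

def predict(text, word_counts, label_counts, vocab):
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        # log P(label) + sum of log P(word | label), with add-one (Laplace) smoothing
        score = math.log(doc_count / total_docs)
        n_words = sum(word_counts[label].values())
        for word in tokenize(text):
            score += math.log((word_counts[label][word] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

emails = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train_naive_bayes(emails)
print(predict("free prize inside", *model))       # spam
print(predict("team meeting on monday", *model))  # ham
```

Working with log-probabilities avoids multiplying many tiny numbers together, and the smoothing keeps an unseen word (like "inside") from zeroing out a whole prediction.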

4. Computer Vision (CV): Teaching Computers to "See"

Computer Vision (CV) is an interdisciplinary field of AI that trains computers to interpret and understand visual information from the world, much like the human visual system. This includes images and videos.

Think of it as giving computers "eyes" and the "brain" to understand what those eyes are seeing.

Key applications of Computer Vision:

- Object Recognition and Detection: Identifying and locating specific objects within an image or video (e.g., recognizing cars, pedestrians, traffic signs).

- Facial Recognition: Identifying individuals based on their facial features.

- Image Classification: Categorizing an entire image (e.g., "this is a picture of a beach").

- Autonomous Vehicles: Enabling self-driving cars to "see" their surroundings, detect obstacles, and navigate safely.

- Medical Imaging Analysis: Assisting doctors in detecting diseases from X-rays, MRIs, and CT scans.

- Quality Control in Manufacturing: Identifying defects on assembly lines.

- Augmented Reality (AR): Understanding the real world to overlay digital information.
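To a computer, an image is just a grid of numbers. The minimal Python sketch below slides a small 3x3 filter (a "kernel") over a tiny made-up grayscale image to detect a vertical edge, the basic operation behind convolutional neural networks. The kernel here is hand-picked for illustration; in a convolutional network, kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation (what most deep learning libraries call convolution)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Made-up 5x5 grayscale image: dark (0) on the left, bright (9) on the right
image = [[0, 0, 9, 9, 9] for _ in range(5)]

# Responds strongly where brightness increases from left to right
vertical_edge_kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

for row in convolve2d(image, vertical_edge_kernel):
    print(row)  # each row prints [27, 27, 0]
```

Large values mark positions where the filter overlaps the dark-to-bright boundary, while the uniform bright region on the right produces 0.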

Core Concepts and Terminology in AI

As you delve deeper into AI, you’ll encounter several recurring terms. Here are some fundamental ones:

- Algorithm: A set of well-defined, step-by-step instructions or rules that a computer follows to solve a problem or perform a task. In AI, algorithms are used to build models, process data, and make decisions.

- Data: The raw material for AI. It can be numbers, text, images, audio, or video. The quality and quantity of data are crucial for training effective AI models.

- Model: The output of a machine learning algorithm after it has been trained on data. It’s the "brain" that has learned patterns and can now make predictions or decisions on new data.

- Training: The process of feeding data to an AI algorithm so it can learn patterns and adjust its internal parameters to create a model.

- Inference: The process of using a trained AI model to make predictions or decisions on new, unseen data.

- Features: Individual measurable properties or characteristics of the data that an AI model uses to make predictions. For example, in a house price prediction model, features might include square footage, number of bedrooms, and location.

- Labels (in Supervised Learning): The correct answers or outcomes associated with the input data during training.

- Bias: A systematic error in an AI model’s predictions that leads to unfair or inaccurate outcomes for certain groups or situations. Bias can stem from biased training data, algorithm design, or societal biases.

- Hyperparameters: Settings that control the learning process of an AI model, set before training begins (e.g., the learning rate, the number of layers in a neural network).
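Several of these terms fit into a few lines of Python. The sketch below trains a one-feature linear model with gradient descent (one common training method, chosen here for illustration) on hypothetical data: hours studied are the features, exam scores are the labels, `w` and `b` are the internal parameters adjusted during training, and `learning_rate` and `epochs` are hyperparameters fixed beforehand.

```python
def train(features, labels, learning_rate=0.05, epochs=5000):
    """Training: fit label ≈ w * feature + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0                   # model parameters, adjusted during training
    n = len(features)
    for _ in range(epochs):           # epochs: a hyperparameter (passes over the data)
        errors = [w * x + b - y for x, y in zip(features, labels)]
        # Gradients of mean((w*x + b - y)^2) with respect to w and b
        grad_w = 2 * sum(e * x for e, x in zip(errors, features)) / n
        grad_b = 2 * sum(errors) / n
        # learning_rate: a hyperparameter controlling the size of each update
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

def infer(w, b, x):
    """Inference: apply the trained parameters to a new input."""
    return w * x + b

# Hypothetical data: feature = hours studied, label = exam score
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

w, b = train(hours, scores)
print(round(w, 3), round(b, 3))       # 2.0 50.0
print(round(infer(w, b, 5.0), 2))     # 60.0 for an unseen input
```

Hyperparameters matter: on this particular data, a learning rate of 0.2 makes each update overshoot, and the parameters grow without bound instead of settling.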

Types of AI: By Capability and Scope

AI can also be categorized based on its capabilities and the scope of its intelligence:

Artificial Narrow Intelligence (ANI) / Weak AI:

- Concept: AI designed and trained for a specific, narrow task. It excels at that one task but cannot perform outside of its programmed domain.

- Current State: This is the only type of AI that exists today.

- Examples: Spam filters, recommendation systems, virtual assistants (like Siri, Alexa), chess-playing computers, facial recognition systems, self-driving cars. Each is highly capable within its specific niche but lacks broader understanding or consciousness.

Artificial General Intelligence (AGI) / Strong AI / Human-Level AI:

- Concept: Hypothetical AI that possesses cognitive abilities comparable to a human being across a wide range of tasks. It would be able to learn, understand, and apply intelligence to any intellectual task that a human can.

- Current State: Does not exist yet. It’s a long-term goal for many AI researchers.

- Characteristics: Would include common sense, creativity, the ability to generalize knowledge from one domain to another, and perhaps even consciousness.

Artificial Super Intelligence (ASI):

- Concept: Hypothetical AI that surpasses human intelligence and capability in virtually every field, including scientific creativity, general wisdom, and social skills.

- Current State: Purely speculative.

- Characteristics: Would be able to learn and adapt at an unprecedented rate, potentially leading to rapid technological advancements or posing existential risks, depending on its alignment with human values.

Where Do We See AI in the Real World? Everyday Applications!

AI is not just in research labs; it’s integrated into countless aspects of our daily lives:

- Smartphones: Voice assistants (Siri, Google Assistant), facial recognition for unlocking, predictive text, camera enhancements, app recommendations.

- Streaming Services: Personalized movie and music recommendations (Netflix, Spotify).

- E-commerce: Product recommendations, personalized shopping experiences, fraud detection.

- Navigation Apps: Real-time traffic analysis, optimal route suggestions (Google Maps, Waze).

- Social Media: Content moderation, personalized feeds, targeted advertising, facial tagging.

- Healthcare: Disease diagnosis, drug discovery, personalized treatment plans, medical image analysis.

- Finance: Fraud detection, algorithmic trading, credit scoring, personalized financial advice.

- Customer Service: Chatbots, automated call routing.

- Automotive: Self-driving cars, advanced driver-assistance systems (ADAS).

- Education: Personalized learning platforms, intelligent tutoring systems.

- Manufacturing: Predictive maintenance, quality control, robotic automation.

Why AI Matters: Benefits and Impact

AI’s growing influence is due to its profound potential to revolutionize various sectors and improve our lives in numerous ways:

- Increased Efficiency and Automation: Automating repetitive and time-consuming tasks, freeing up human workers for more complex and creative endeavors.

- Enhanced Decision-Making: Providing data-driven insights and predictions that lead to more informed and accurate decisions in business, healthcare, and beyond.

- Problem Solving at Scale: Tackling complex challenges that are beyond human cognitive capacity, such as climate modeling, drug discovery, or optimizing logistics networks.

- Personalization: Delivering highly customized experiences in areas like education, entertainment, and retail.

- Accessibility: Creating tools and technologies that assist individuals with disabilities, such as AI-generated live captions for people who are deaf or hard of hearing, or navigation and scene-description tools for people who are visually impaired.

- Innovation: Driving new discoveries and creating entirely new industries and services.

The Road Ahead: Challenges and the Future of AI

While the potential of AI is immense, it’s also a field with ongoing challenges and ethical considerations:

- Data Quality and Bias: AI models are only as good as the data they’re trained on. Biased or incomplete data can lead to unfair or discriminatory outcomes.

- Explainability (XAI): Understanding why an AI model made a particular decision, especially in complex deep learning models, can be challenging. This "black box" problem is crucial in critical applications like healthcare or finance.

- Ethical Implications: Concerns around privacy, job displacement, autonomous weapons, and the potential for misuse of AI are critical discussions.

- Safety and Robustness: Ensuring AI systems are reliable, secure, and operate safely in real-world scenarios.

Despite these challenges, the future of AI is incredibly promising. We can expect continued advancements in:

- More sophisticated and human-like interactions: AI will become even better at understanding and generating natural language.

- Greater integration into daily life: AI will become even more embedded in our homes, vehicles, and workplaces.

- Progress toward AGI: While AGI remains a distant and debated goal, research toward more general systems will continue.

- Ethical AI development: Increasing focus on building AI that is fair, transparent, and aligned with human values.

Conclusion: Your Journey into AI Begins Now!

Artificial Intelligence is not a futuristic concept; it’s a rapidly evolving field that is already shaping our present. Understanding its fundamentals – from the core concept of machines learning from data to the specialized powers of deep learning, NLP, and computer vision – is no longer just for experts; it’s becoming a foundational literacy for everyone.

By grasping these basic concepts, you’ve taken the first crucial step in demystifying AI. The world of AI is vast and exciting, offering endless opportunities for innovation and discovery. As AI continues to evolve, your foundational knowledge will serve as a powerful tool to navigate and contribute to this transformative era. The journey has just begun!
