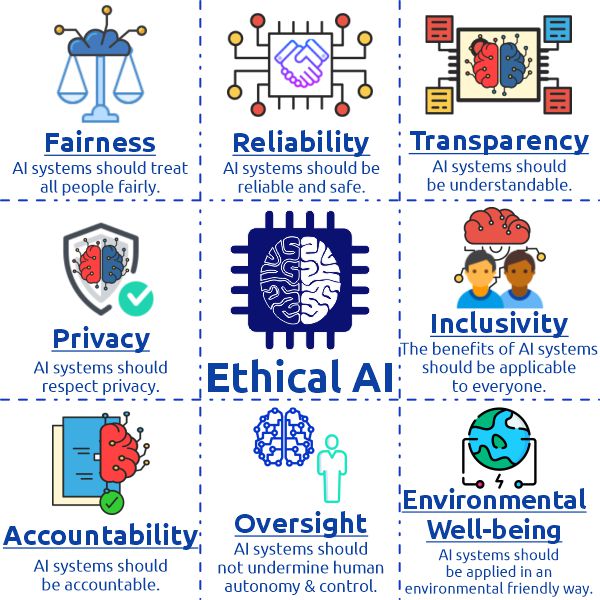

Ethical AI Development Guidelines: Building a Responsible Future with Artificial Intelligence

In an era where Artificial Intelligence (AI) is rapidly transforming every facet of our lives, from how we communicate and work to how we receive healthcare and manage our cities, its incredible power comes with an equally significant responsibility. AI holds the potential to solve some of humanity’s most pressing challenges, but without careful consideration and ethical guidance, it can also amplify existing societal biases, compromise privacy, and even cause unintended harm.

This is where Ethical AI Development Guidelines come into play. They are not just theoretical concepts; they are the crucial framework that ensures AI is built and deployed in a way that benefits humanity, respects individual rights, and fosters a trustworthy digital future.

This comprehensive guide will break down the core principles of ethical AI, explain why they are so vital, and offer practical insights into how developers, businesses, and policymakers can integrate these guidelines into their AI strategies.

What Exactly is Ethical AI?

At its core, Ethical AI refers to the design, development, and deployment of Artificial Intelligence systems that align with human values, promote well-being, and minimize harm. It’s about ensuring that AI is:

- Fair: Treats all individuals and groups equitably.

- Transparent: Its decisions can be understood and explained.

- Accountable: There are clear lines of responsibility for its actions.

- Secure: Protects data and resists malicious attacks.

- Beneficial: Serves humanity and contributes positively to society.

Think of it like building a bridge. You wouldn’t just build it without engineering standards, safety checks, and environmental considerations. Similarly, AI, with its vast societal impact, requires robust ethical standards to ensure it’s built safely, fairly, and responsibly.

Why Are Ethical AI Development Guidelines So Important?

The stakes are incredibly high. The decisions AI makes can affect everything from who gets a loan or a job interview to medical diagnoses and legal judgments. Without ethical guidelines, we risk:

- Amplifying Bias and Discrimination: If AI learns from biased data, it can perpetuate and even amplify those biases, leading to unfair outcomes for certain groups.

- Erosion of Trust: If people don’t understand how AI makes decisions or feel their privacy is compromised, they will lose trust in the technology, hindering its adoption and positive impact.

- Privacy Violations: AI systems often rely on vast amounts of data, raising concerns about how personal information is collected, stored, and used.

- Lack of Accountability: When an AI system makes a mistake or causes harm, who is responsible? Clear guidelines ensure accountability.

- Unintended Harm: Without careful foresight, AI can lead to unforeseen negative consequences, from job displacement to autonomous systems making critical errors.

- Legal and Reputational Risks: Companies failing to adhere to ethical AI practices can face significant legal penalties, public backlash, and damage to their brand.

By proactively addressing these concerns through ethical guidelines, we can unlock AI’s full potential while mitigating its risks, fostering innovation, and building a more equitable and efficient world.

The Core Pillars of Ethical AI Development

While different organizations may phrase them slightly differently, most ethical AI frameworks revolve around a common set of fundamental principles. Let’s explore these key pillars:

1. Fairness & Non-Discrimination

What it means: AI systems should treat all individuals and groups equally, without exhibiting unfair bias or discrimination. This means ensuring that AI outcomes are not systematically detrimental to specific genders, races, ethnicities, socioeconomic groups, or other protected characteristics.

Why it’s important: Bias in AI can lead to real-world harm, such as:

- Discriminatory hiring practices.

- Unfair loan approvals or insurance rates.

- Flawed facial recognition for certain demographics.

- Biased sentencing recommendations in the justice system.

How to achieve it:

- Diverse and Representative Data: Ensure the data used to train AI models accurately reflects the diversity of the real world and avoids over-representation or under-representation of specific groups.

- Bias Detection and Mitigation: Actively identify and correct for biases in data, algorithms, and model outputs throughout the development lifecycle.

- Fairness Metrics: Define and measure fairness using various metrics (e.g., equal opportunity, demographic parity) to ensure the model performs equitably across different groups.

- Regular Auditing: Continuously monitor AI systems in deployment for emerging biases and unintended discriminatory impacts.
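To make the fairness metrics above concrete, here is a minimal sketch in plain Python (no fairness library) that computes the demographic parity difference (the gap in positive-prediction rates between groups) and the equal opportunity difference (the gap in true-positive rates, i.e., how often people who truly qualify receive a positive outcome). The toy labels, predictions, and group names are hypothetical. Libraries such as Fairlearn provide tested versions of these metrics, and what counts as an acceptable gap is a policy decision that the code cannot settle.

```python
from typing import List, Sequence

def _rate(values: List[int]) -> float:
    """Fraction of 1s in a non-empty list of 0/1 values."""
    return sum(values) / len(values)

def demographic_parity_difference(y_pred: Sequence[int], groups: Sequence[str]) -> float:
    """Largest gap in positive-prediction rate between any two groups."""
    rates = [
        _rate([p for p, grp in zip(y_pred, groups) if grp == g])
        for g in set(groups)
    ]
    return max(rates) - min(rates)

def equal_opportunity_difference(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> float:
    """Largest gap in true-positive rate between groups (groups with no actual positives are skipped)."""
    tprs = []
    for g in set(groups):
        preds_on_positives = [
            p for t, p, grp in zip(y_true, y_pred, groups) if grp == g and t == 1
        ]
        if preds_on_positives:
            tprs.append(_rate(preds_on_positives))
    return max(tprs) - min(tprs)

# Toy data: 1 = positive outcome (e.g., shortlisted); groups "a" and "b" are hypothetical.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(demographic_parity_difference(y_pred, groups))                   # 0.25
print(round(equal_opportunity_difference(y_true, y_pred, groups), 3))  # 0.333
```

A value of 0 means the groups are treated identically on that metric. The two metrics can disagree with each other, which is one reason audits usually report several.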

2. Transparency & Explainability

What it means: AI systems should be understandable, and their decision-making processes should be clear to relevant stakeholders. This isn’t always about understanding every line of code, but rather being able to explain why an AI made a particular decision or prediction.

Why it’s important:

- Trust: People are more likely to trust systems they can understand.

- Accountability: It’s hard to hold a "black box" accountable.

- Debugging and Improvement: Understanding why an AI failed helps in fixing and improving it.

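- Compliance: In regulated sectors (e.g., finance, healthcare), explanations for certain automated decisions can be legally required; for example, U.S. lenders must give applicants the principal reasons for denying credit.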

How to achieve it:

- Interpretable Models: Where appropriate, prefer inherently interpretable models (e.g., decision trees or linear models) over highly complex "black box" models.

- Explainable AI (XAI) Techniques: Employ tools and techniques that help explain the outputs of complex AI models (e.g., LIME, SHAP values).

- Clear Communication: Provide users with clear, concise explanations for AI decisions, especially those that have significant impact (e.g., "Your loan was denied because of a high debt-to-income ratio and a low credit score").

- Documentation: Maintain thorough documentation of the AI’s design, training data, assumptions, and decision logic.
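One way to produce the kind of explanation shown in the loan example above is to use a linear model, where each feature's contribution to the score can be read off directly. The sketch below assumes a hypothetical three-feature scoring model with made-up weights and a made-up baseline applicant; it ranks the features that pulled a given applicant's score down the most and returns them as reason codes.

```python
# Hypothetical linear scoring model: the weights and the baseline ("typical") applicant
# are illustrative values, not a real credit scorecard.
WEIGHTS = {"debt_to_income": -4.0, "credit_score": 0.01, "years_employed": 0.2}
BASELINE = {"debt_to_income": 0.30, "credit_score": 680.0, "years_employed": 5.0}

def contributions(applicant: dict) -> dict:
    """Per-feature contribution to the score, relative to the baseline applicant.

    For a linear model these add up exactly to score(applicant) - score(baseline).
    """
    return {f: w * (applicant[f] - BASELINE[f]) for f, w in WEIGHTS.items()}

def reason_codes(applicant: dict, top_n: int = 2) -> list:
    """Features that lowered the score the most, most harmful first."""
    negatives = sorted((c, f) for f, c in contributions(applicant).items() if c < 0)
    return [f for _, f in negatives[:top_n]]

applicant = {"debt_to_income": 0.55, "credit_score": 600.0, "years_employed": 6.0}
print(reason_codes(applicant))  # ['debt_to_income', 'credit_score']
```

For a linear model with independent features, SHAP values take this same weight-times-difference form when the baseline is the average applicant; for more complex models, tools such as SHAP or LIME estimate comparable attributions rather than reading them off exactly.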

3. Accountability & Responsibility

What it means: There must be clear lines of responsibility for the actions and impacts of AI systems. When an AI makes a mistake or causes harm, there should be a human or a defined entity that can be held accountable.

Why it’s important: Without accountability, there’s no incentive to ensure AI is developed safely and ethically, and no recourse for those negatively affected. It prevents a "blame the algorithm" mentality.

How to achieve it:

- Defined Roles: Clearly assign roles and responsibilities for AI development, deployment, monitoring, and maintenance within an organization.

- Human Oversight: Ensure human oversight and intervention capabilities, especially for high-stakes AI applications.

- Impact Assessments: Conduct regular ethical impact assessments before and during AI deployment to anticipate and mitigate potential harms.

- Redress Mechanisms: Establish clear processes for individuals to challenge AI decisions and seek recourse if they believe they have been unfairly treated.

- Legal and Regulatory Frameworks: Advocate for and comply with evolving laws and regulations governing AI responsibility.
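Accountability and redress depend on being able to reconstruct, after the fact, what a system decided, why, with which model version, and who is answerable for it. The following is a minimal sketch of such a decision record with a hook for appeals. The field names, the version tag, and the owning team are hypothetical, and the in-memory class stands in for whatever durable, access-controlled store an organization actually uses.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional

@dataclass
class DecisionRecord:
    """One automated decision, with enough context to explain, review, or contest it."""
    model_version: str
    inputs: dict
    outcome: str
    reasons: List[str]
    accountable_owner: str                # hypothetical: the team answerable for this model
    human_reviewer: Optional[str] = None  # set when a person reviewed or overrode the outcome
    appeal: Optional[str] = None          # the individual's stated grounds for challenging it
    decision_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

class DecisionLog:
    """Simplified in-memory stand-in for an audit store."""

    def __init__(self) -> None:
        self._records: Dict[str, DecisionRecord] = {}

    def record(self, rec: DecisionRecord) -> str:
        self._records[rec.decision_id] = rec
        return rec.decision_id

    def open_appeal(self, decision_id: str, grounds: str) -> DecisionRecord:
        """Redress: attach a challenge so a named human reviewer can re-examine the case."""
        rec = self._records[decision_id]
        rec.appeal = grounds
        return rec

    def export(self, decision_id: str) -> str:
        """JSON copy of a record, e.g., for an auditor or the affected individual."""
        return json.dumps(asdict(self._records[decision_id]))

log = DecisionLog()
decision_id = log.record(DecisionRecord(
    model_version="credit-model-1.4",  # hypothetical version tag
    inputs={"debt_to_income": 0.55},
    outcome="deny",
    reasons=["debt_to_income"],
    accountable_owner="lending-risk-team",
))
log.open_appeal(decision_id, "Debt figure is outdated")
print(log.export(decision_id))
```

In a production audit trail, an appeal would more likely be appended as a separate event than written onto the original record, so that the history itself cannot be altered.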

4. Privacy & Data Security

What it means: AI systems must be designed and operated in a way that respects user privacy and protects sensitive data from unauthorized access, use, or disclosure.

Why it’s important: AI often relies on vast datasets, including personal information. Mishandling this data can lead to identity theft, surveillance, discrimination, and a profound loss of trust.

How to achieve it:

- Data Minimization: Collect only the data absolutely necessary for the AI’s intended purpose.

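- Anonymization & Pseudonymization: Where possible, remove personal identifiers (anonymization) or replace them with artificial tokens (pseudonymization). Pseudonymized data can be re-linked to individuals by whoever holds the mapping or key, so regulations such as GDPR still treat it as personal data.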

- Robust Security Measures: Implement strong cybersecurity protocols to protect data from breaches, including encryption, access controls, and regular security audits.

- User Consent: Obtain explicit and informed consent from individuals before collecting and using their data.

- Compliance with Regulations: Adhere to data protection laws like GDPR, CCPA, and others relevant to your operations.

- Privacy-Enhancing Technologies (PETs): Explore technologies like federated learning or differential privacy that allow AI to learn from data without directly exposing individual user information.
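The following sketch illustrates three of the techniques listed above on a toy record: data minimization through a field allow-list, pseudonymization with a keyed hash (HMAC-SHA256 from Python's standard library), and the Laplace mechanism, a basic building block of differential privacy, applied to a count. The allow-list, key, and epsilon values are hypothetical. A real system should keep keys in a secrets manager and use a vetted differential privacy library, since hand-rolled floating-point noise like this is known to leak information in edge cases.

```python
import hashlib
import hmac
import random

# Hypothetical allow-list: the only fields this particular model needs.
ALLOWED_FIELDS = {"age_band", "region", "purchase_total"}

def minimize(record: dict) -> dict:
    """Data minimization: drop every field not on the allow-list."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}

def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same ID always maps to the same token, so records can still be joined,
    but anyone holding the key can re-link tokens to IDs. This is pseudonymization,
    not anonymization, and the key must be stored separately from the data.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def dp_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1): add Laplace(0, 1/epsilon) noise."""
    scale = 1.0 / epsilon
    # The difference of two independent exponentials with mean `scale` is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise

raw = {"name": "Ada", "email": "ada@example.com", "age_band": "30-39",
       "region": "EU", "purchase_total": 120.0}
print(minimize(raw))  # {'age_band': '30-39', 'region': 'EU', 'purchase_total': 120.0}

key = b"replace-with-a-key-from-a-secrets-manager"  # hypothetical placeholder
token = pseudonymize("user-1234", key)

rng = random.Random(7)
print(dp_count(42, epsilon=1.0, rng=rng))  # 42 plus random noise
```

Smaller values of epsilon add more noise and give stronger privacy; choosing epsilon is a policy trade-off between privacy and accuracy.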

5. Human-Centricity & Control

What it means: AI should augment human capabilities, empower individuals, and serve human well-being, rather than replacing human judgment entirely or diminishing human autonomy. Humans should remain in control of critical decisions.

Why it’s important:

- Preserving Human Dignity: AI should enhance, not detract from, human agency and dignity.

- Avoiding Over-Reliance: Humans should understand AI’s limitations and not blindly trust its outputs, especially in complex or sensitive situations.

- Ensuring Ethical Alignment: Humans provide the ethical compass that guides AI.

How to achieve it:

- Human-in-the-Loop: Design AI systems to include human review and override capabilities, especially for high-consequence decisions.

- Meaningful Human Control: Ensure that humans can understand, predict, and reliably intervene in the AI’s operation.

- User Empowerment: Provide users with clear interfaces and options to manage their interactions with AI and control their data.

- Focus on Augmentation: Design AI to assist humans, taking on repetitive tasks and providing insights, thereby freeing humans to focus on more complex, creative, and empathetic work.
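A human-in-the-loop policy can be expressed as a small routing rule that decides, case by case, whether the model may act on its own. The sketch below encodes one possible policy, which is an assumption for illustration rather than a standard: the model may only auto-approve confident, low-stakes cases, while every adverse outcome, every low-confidence case, and every high-stakes case is sent to a person, whose decision always overrides the model. The threshold and labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Routing:
    action: str        # "auto_approve" or "human_review"
    confidence: float
    reason: str

def route(prob_approve: float, high_stakes: bool, auto_threshold: float = 0.9) -> Routing:
    """Decide whether the model may act alone or a person must decide.

    Assumed policy: the model may only auto-approve; any adverse outcome,
    any low-confidence case, and any high-stakes case goes to a human.
    """
    confidence = max(prob_approve, 1.0 - prob_approve)
    if high_stakes:
        return Routing("human_review", confidence, "high-stakes case")
    if prob_approve < 0.5:
        return Routing("human_review", confidence, "model leans toward an adverse outcome")
    if confidence < auto_threshold:
        return Routing("human_review", confidence, "confidence below threshold")
    return Routing("auto_approve", confidence, "confident, low-stakes approval")

def final_decision(routing: Routing, human_decision: Optional[str] = None) -> str:
    """A human decision, when given, always overrides the model."""
    if human_decision is not None:
        return human_decision
    if routing.action == "human_review":
        raise ValueError("this case needs a human decision")
    return "approve"

print(route(0.95, high_stakes=False).action)  # auto_approve
print(route(0.70, high_stakes=False).action)  # human_review
print(final_decision(route(0.95, high_stakes=False), human_decision="deny"))  # deny
```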

6. Safety & Robustness

What it means: AI systems must be reliable, secure, and perform as intended without causing unintended harm. They should be resilient to errors, malicious attacks, and unexpected inputs.

Why it’s important: An unreliable or easily compromised AI can lead to dangerous outcomes, especially in critical applications like autonomous vehicles, medical devices, or infrastructure management.

How to achieve it:

- Rigorous Testing: Conduct extensive testing, including stress tests, edge case analysis, and adversarial testing, to identify vulnerabilities and ensure reliable performance.

- Error Handling: Design AI systems with robust error detection and handling mechanisms.

- Security by Design: Integrate security considerations from the very beginning of the AI development lifecycle.

- Resilience to Attacks: Protect against adversarial attacks (where malicious actors try to trick the AI into making wrong decisions).

- Continuous Monitoring: Implement real-time monitoring to detect anomalies and performance degradation in deployed AI systems.
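Continuous monitoring often starts with checking whether live inputs still resemble the training data. One widely used drift measure is the Population Stability Index (PSI), which compares how values fall into the same bins in a reference sample and a live sample. The sketch below is a minimal, dependency-free version; the feature, bin edges, sample values, and alert threshold are hypothetical.

```python
import math
from bisect import bisect_right
from typing import List, Sequence

def _bin_shares(values: Sequence[float], edges: Sequence[float], eps: float) -> List[float]:
    """Share of values in each bin; out-of-range values are clamped into the end bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = min(max(bisect_right(edges, v) - 1, 0), n_bins - 1)
        counts[i] += 1
    # Floor empty bins at eps so the log term below stays finite.
    return [max(c / len(values), eps) for c in counts]

def population_stability_index(reference, live, edges, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (live_share - ref_share) * ln(live_share / ref_share)."""
    ref = _bin_shares(reference, edges, eps)
    cur = _bin_shares(live, edges, eps)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

# Hypothetical feature (applicant age) at training time vs. in production.
edges = [18, 30, 45, 60, 100]
training_ages = [22, 25, 31, 35, 40, 44, 50, 52, 58, 63]
live_ages = [19, 21, 23, 24, 26, 28, 33, 36, 47, 61]

psi = population_stability_index(training_ages, live_ages, edges)
# Common rule of thumb (a heuristic, not a standard): < 0.1 stable, 0.1-0.25 moderate, > 0.25 investigate.
if psi > 0.25:
    print(f"ALERT: input drift detected (PSI={psi:.2f})")
```

Drift does not prove the model is wrong, but it is a signal to re-check accuracy and fairness metrics on recent data.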

7. Beneficence & Sustainability

What it means: AI development should strive to create positive societal impact, contribute to human well-being, and consider its environmental footprint.

Why it’s important: AI has the potential to address global challenges like climate change, disease, and poverty. Ethical development encourages using this power for good.

How to achieve it:

- Purpose-Driven Design: Focus on developing AI applications that solve real-world problems and contribute to sustainable development goals.

- Environmental Impact Assessment: Consider the energy consumption and environmental impact of training and deploying large AI models.

- Accessibility: Design AI to be accessible and beneficial to a wide range of users, including those with disabilities.

- Social Impact Assessment: Proactively assess the potential social impacts (positive and negative) of AI systems before deployment.
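A rough environmental impact assessment can start from a back-of-the-envelope estimate of a training run's operational emissions. The sketch below multiplies accelerator count, average power draw, and runtime, scales by the data center's power usage effectiveness (PUE, the ratio of total facility energy to IT equipment energy), and converts to CO2-equivalent using the grid's carbon intensity. All input values are hypothetical, and the formula deliberately leaves out CPUs, memory, networking, and the emissions from manufacturing the hardware.

```python
def training_emissions_kg(
    num_accelerators: int,
    avg_power_watts: float,
    hours: float,
    pue: float,
    grid_kg_co2e_per_kwh: float,
) -> float:
    """Rough operational emissions of a training run.

    energy (kWh) = accelerators * average power (kW) * hours * PUE
    emissions (kg CO2e) = energy * grid carbon intensity
    Ignores CPUs, memory, networking, and the embodied emissions of the hardware.
    """
    energy_kwh = num_accelerators * (avg_power_watts / 1000.0) * hours * pue
    return energy_kwh * grid_kg_co2e_per_kwh

# Hypothetical run: 8 accelerators averaging 300 W for 24 hours in a data center
# with a PUE of 1.2, on a grid emitting 0.4 kg CO2e per kWh.
print(round(training_emissions_kg(8, 300, 24, 1.2, 0.4), 1))  # 27.6
```

Re-running the estimate with a different grid intensity is a quick way to see how the choice of region or time of day changes the footprint.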

Implementing Ethical AI: Practical Steps for Developers and Organizations

Developing ethical AI isn’t just about understanding principles; it’s about embedding them into the entire AI lifecycle.

1. Establish Clear Policies and Principles

- Formulate a company-wide ethical AI policy that reflects your values and commitment.

- Translate these high-level principles into actionable guidelines for your AI teams.

2. Foster a Culture of Ethics

- Training and Education: Provide regular training for all AI developers, data scientists, and product managers on ethical AI principles and best practices.

- Ethical Review Boards: Consider establishing an internal ethics committee or review board to scrutinize AI projects, especially those with high societal impact.

3. Integrate Ethics into the AI Lifecycle

- Design Phase: Begin with ethical considerations. What are the potential harms? How can bias be mitigated from the start?

- Data Collection & Preparation: Ensure data sources are ethical, representative, and privacy-compliant.

- Model Development: Use interpretable models when possible, and implement bias detection and mitigation techniques.

- Testing & Validation: Conduct rigorous testing for fairness, robustness, and accuracy across different demographics.

- Deployment & Monitoring: Continuously monitor AI systems in the real world for unintended consequences, performance drift, and emerging biases.
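The Testing & Validation and Deployment & Monitoring stages above can be backed by an automated release gate that refuses to ship a model unless ethical checks pass. The sketch below is a hypothetical example: it requires a completed impact assessment, a minimum accuracy for every group, and a maximum accuracy gap between groups. The thresholds are placeholder policy values, and a real gate would check more than accuracy (for example, the fairness metrics discussed earlier and robustness tests).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Sequence

@dataclass
class GateResult:
    passed: bool
    failures: List[str] = field(default_factory=list)

def accuracy_by_group(
    y_true: Sequence[int], y_pred: Sequence[int], groups: Sequence[str]
) -> Dict[str, float]:
    """Share of correct predictions within each group."""
    totals: Dict[str, int] = {}
    correct: Dict[str, int] = {}
    for t, p, g in zip(y_true, y_pred, groups):
        totals[g] = totals.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + int(t == p)
    return {g: correct[g] / totals[g] for g in totals}

def release_gate(y_true, y_pred, groups, impact_assessment_done: bool,
                 min_accuracy: float = 0.8, max_gap: float = 0.05) -> GateResult:
    """Block deployment unless every group clears the bar and no group lags far behind.

    Thresholds are placeholder policy values, not recommendations.
    """
    failures: List[str] = []
    if not impact_assessment_done:
        failures.append("ethical impact assessment not completed")
    acc = accuracy_by_group(y_true, y_pred, groups)
    for g in sorted(acc):
        if acc[g] < min_accuracy:
            failures.append(f"group {g}: accuracy {acc[g]:.2f} below {min_accuracy}")
    gap = max(acc.values()) - min(acc.values())
    if gap > max_gap:
        failures.append(f"accuracy gap between groups {gap:.2f} exceeds {max_gap}")
    return GateResult(passed=not failures, failures=failures)

result = release_gate(
    y_true=[1, 0, 1, 0, 1, 0, 1, 0],
    y_pred=[1, 0, 1, 0, 1, 1, 0, 0],
    groups=["a", "a", "a", "a", "b", "b", "b", "b"],
    impact_assessment_done=True,
)
print(result.passed)    # False
print(result.failures)  # group b falls below the accuracy bar, and the gap is too large
```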

4. Promote Diversity in AI Teams

- Diverse teams (in terms of background, gender, ethnicity, and experience) are better equipped to identify and mitigate biases, and to consider a wider range of ethical implications.

5. Engage Stakeholders

- Involve end-users, affected communities, and ethicists in the design and evaluation process to gain diverse perspectives.

6. Invest in Research and Tools

- Support research into ethical AI, explainable AI (XAI), fairness metrics, and privacy-preserving technologies.

- Utilize available tools and libraries designed to help detect and mitigate bias or improve transparency.

Challenges in Ethical AI Development

While the goals are clear, achieving truly ethical AI is complex. Some challenges include:

- Defining "Ethical": Ethical principles can be subjective and vary across cultures and contexts.

- Trade-offs: Optimizing for one objective (e.g., predictive accuracy) can conflict with ethical goals such as fairness or transparency.

- Scalability: Applying rigorous ethical checks to every AI system at scale can be resource-intensive.

- Evolving Technology: AI capabilities are advancing rapidly, often faster than ethical frameworks can keep pace.

- Global Harmonization: Lack of unified international standards makes it difficult for global companies to navigate diverse regulatory landscapes.

The Future of Ethical AI

Ethical AI is not a destination but an ongoing journey. As AI becomes more sophisticated and pervasive, the need for robust ethical guidelines will only intensify. The future will likely see:

- More Sophisticated Tools: Development of more advanced tools for bias detection, explainability, and privacy preservation.

- Stronger Regulatory Frameworks: Governments worldwide will continue to develop and enforce AI-specific laws and regulations.

- Industry Standards: Increased collaboration among industry leaders to establish common ethical AI standards and certifications.

- Public Awareness and Education: Greater public understanding and demand for ethically developed AI products and services.

Conclusion

Ethical AI development is not an optional add-on; it is a fundamental requirement for building a responsible and beneficial AI-powered future. By prioritizing fairness, transparency, accountability, privacy, human-centricity, safety, and beneficence, we can ensure that artificial intelligence truly serves humanity and enhances our world, rather than creating new problems.

It requires a collective effort from AI developers, researchers, policymakers, businesses, and the public to ensure that AI is a force for good, built on a foundation of trust, integrity, and human values. The time to embed these principles is now, as we stand at the cusp of an AI revolution with the power to shape our tomorrow.

Frequently Asked Questions (FAQs) About Ethical AI Development

Q1: Is AI inherently unethical?

A1: No, AI itself is a tool or a technology. Its ethical implications arise from how it is designed, developed, and used by humans. Just like any powerful technology, AI can be used for good or ill, depending on the intentions and ethical considerations of its creators and deployers.

Q2: Who is responsible for ensuring AI is ethical?

A2: Responsibility is shared across many stakeholders:

- AI Developers and Data Scientists: Responsible for building ethical models and for checking training data for bias and mitigating it.

- Companies and Organizations: Responsible for establishing ethical guidelines, policies, and oversight.

- Policymakers and Governments: Responsible for creating regulatory frameworks and laws.

- Users and Society: Responsible for demanding ethical AI and holding creators accountable.

Q3: Can AI ever be completely free of bias?

A3: Achieving "zero bias" is extremely challenging, if not impossible, because AI learns from data that often reflects existing societal biases. The goal of ethical AI is to actively identify, measure, and mitigate biases to the greatest extent possible, and to continuously monitor for emerging biases in deployed systems. It’s about striving for fairness, not perfection.

Q4: How do ethical AI guidelines affect innovation?

A4: Far from stifling innovation, ethical guidelines can actually foster it. By providing a clear framework and instilling trust, they encourage the development of more robust, responsible, and widely accepted AI solutions. Companies that prioritize ethical AI are likely to build more sustainable products and gain a competitive edge in the long run.

Q5: What’s the difference between AI ethics and AI safety?

A5: They are closely related but distinct:

- AI Ethics focuses on the moral principles that should guide AI development and use, addressing questions of fairness, privacy, accountability, and societal impact.

- AI Safety focuses on preventing unintended or harmful behaviors from AI systems, especially powerful autonomous ones. This includes issues like robustness, control, and ensuring AI systems align with human goals to prevent catastrophic outcomes. Ethical AI often includes safety as a core component.
